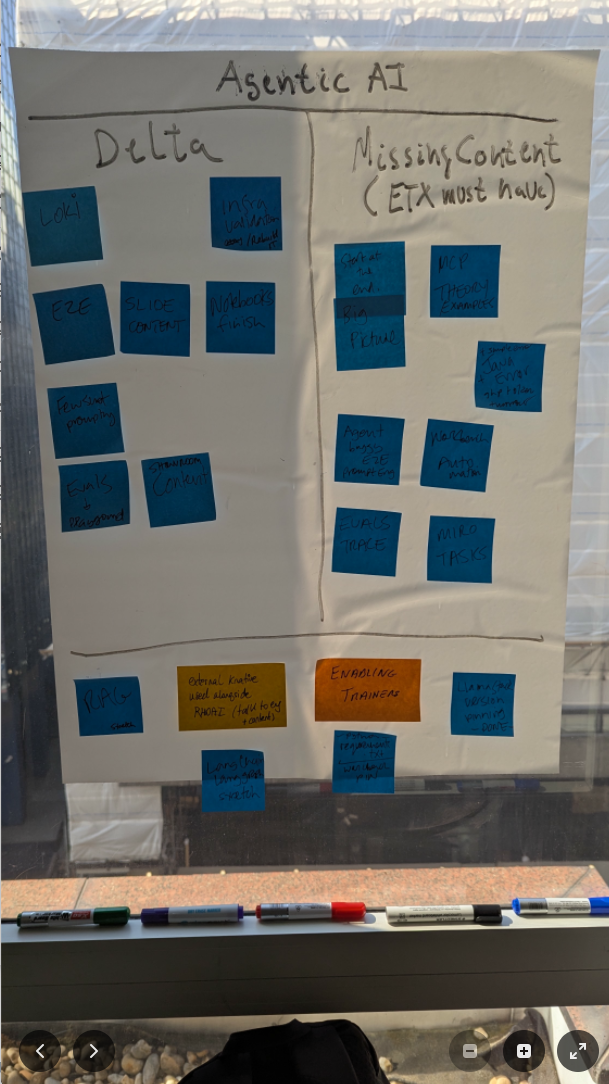

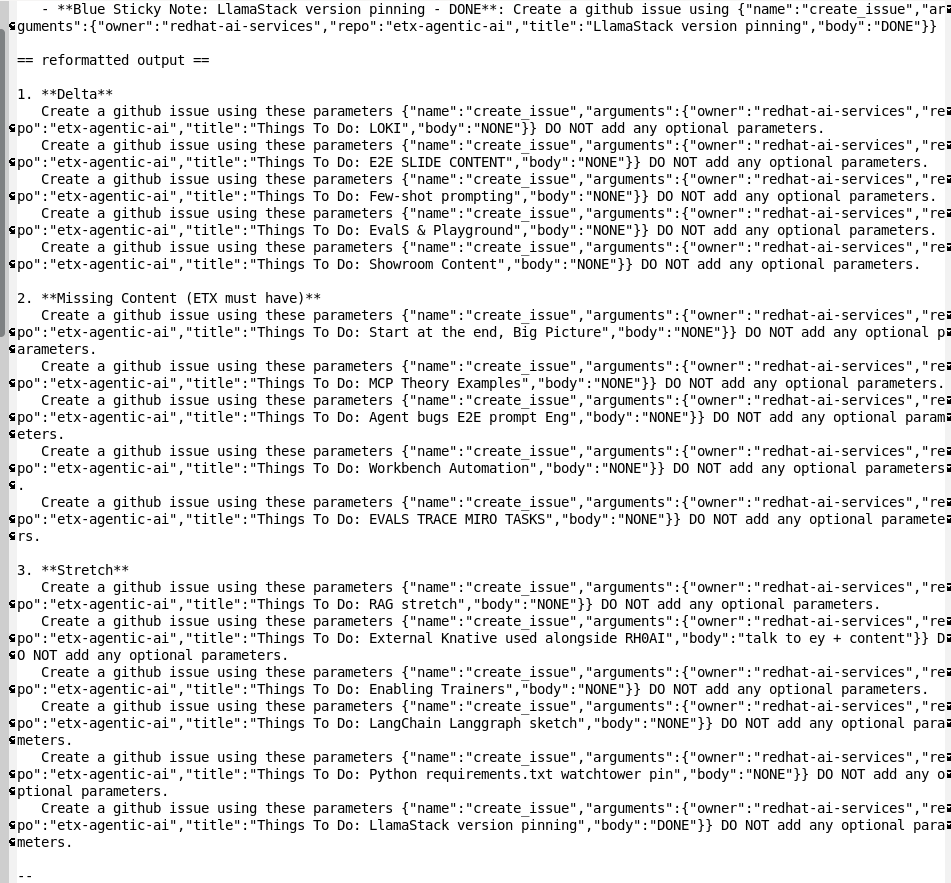

0. post-it note

1. eval models -> qwen wins

2. use qwen VL for first prompt

2.1. emacs + macros to reformat output

3. use llama4 scout for second text only prompt gen

3.1. emacs + macros to reformat output

4. llama stack playground - mcp::github agentic

eformat.me

Emerging Tech Experience (ETX AI), Vibe Coding, GenAI

Tags : ai, rhoai, openshift, llm, genai, llama-stack, emacs, agentic

“AI won’t take your job, but someone who understands AI will.” - Economist Richard Baldwin, 2023 World Economic Forum’s Growth Summit.

Vibe coding a GitHub project issues list using Red Hat OpenShift AI, Agentic AI, MultiModal + MoE Models, MCP Tools in under 45 min.

Things To Do

It’s not often you get to spend an intensive week just hacking around. A nice break from the day job.

Here is the succinct workflow:

Sticky notes

Given my past, sticky notes have taken over my life - very much like the scene in "The Fall Guy" - just google "sticky notes the fall guy" and watch that movie.

This was the INPUT for vibe coding: a backlog of things we wanted to work on after the hackathon.

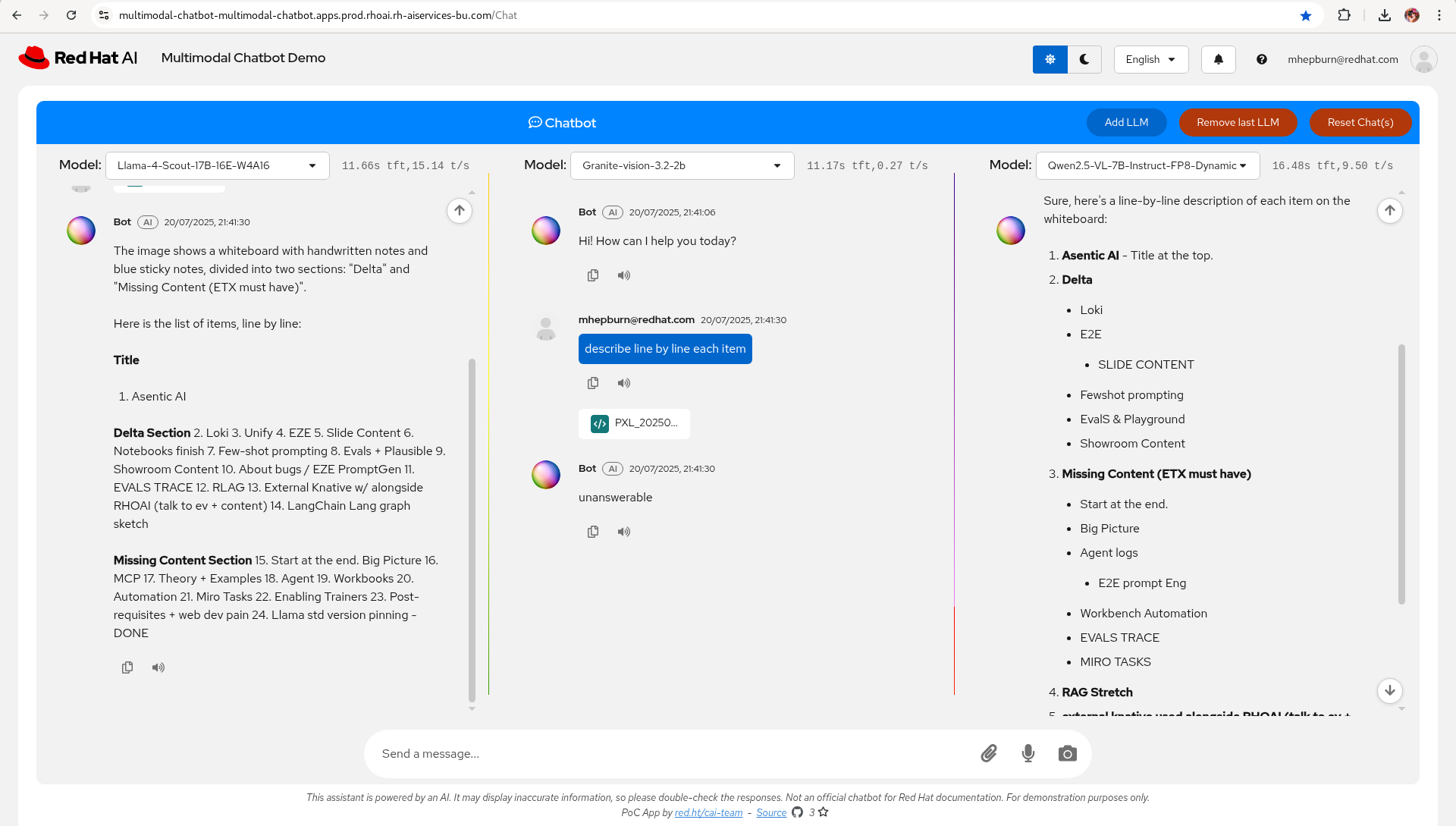

Multi Modal Chatbot

Next step - take the image and use the Red Hat Multi Modal Chatbot Demo to evaluate 3 models for Vision to Language:

- Llama-4-Scout-17B-16E-W4A16
- Granite-vision-3.2-2b
- Qwen2.5-VL-7B-Instruct-FP8-Dynamic

Prompt: describe line by line each item.

Model: Qwen2.5-VL-7B-Instruct-FP8-Dynamic

Qwen wins for this step.

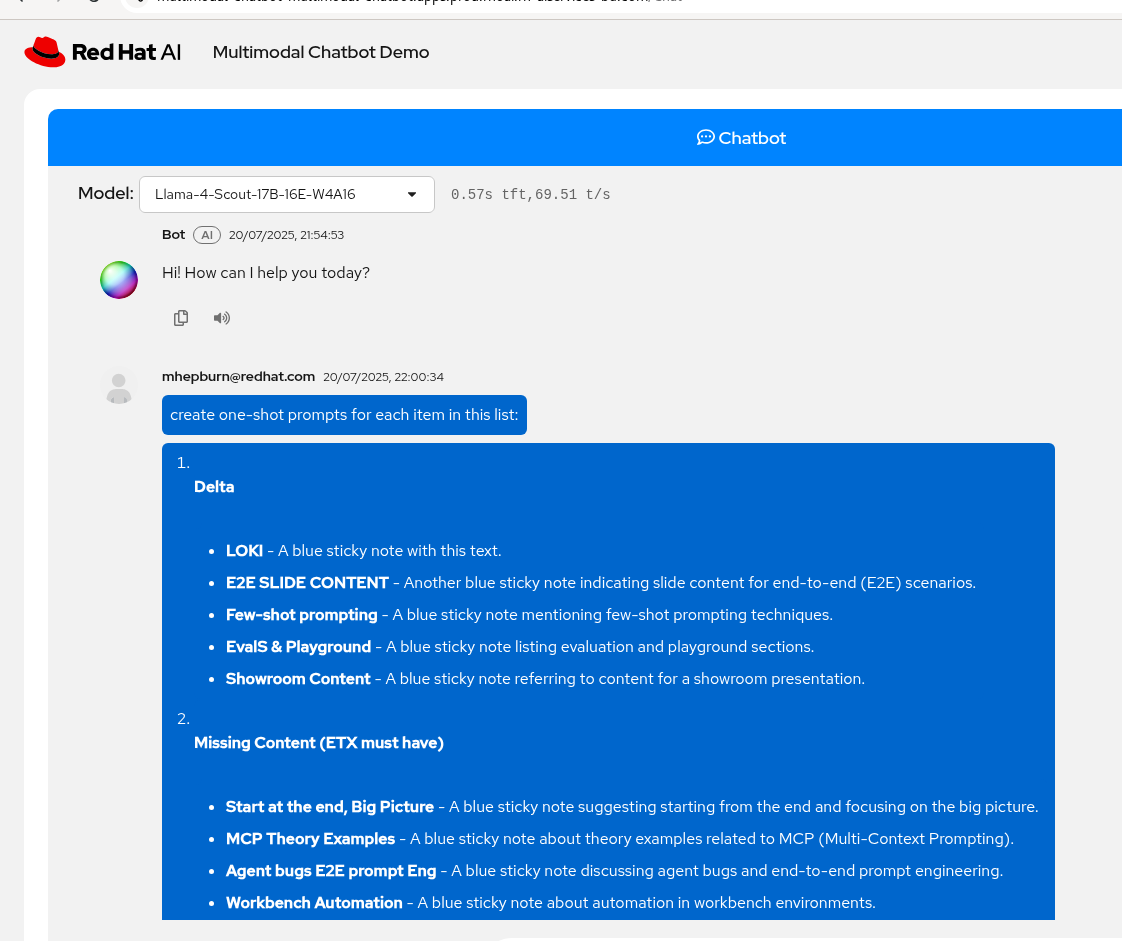
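For anyone who would rather script this step than click through the chatbot UI, here is a minimal sketch of the request body. It assumes the model sits behind an OpenAI-compatible chat completions endpoint, which this post does not confirm, and the helper name `build_vision_request` is made up for illustration. Only the prompt and the model name come from the step above.

```python
import base64
import json


def build_vision_request(image_bytes: bytes, prompt: str, model: str) -> dict:
    """Build an OpenAI-style chat payload carrying one text part and one image part.

    Assumption: the serving endpoint accepts the OpenAI chat completions format
    with the image passed inline as a base64 data URL.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for the photo of the sticky notes.
    payload = build_vision_request(
        b"placeholder-image-bytes",
        "describe line by line each item.",
        "Qwen2.5-VL-7B-Instruct-FP8-Dynamic",
    )
    print(json.dumps(payload, indent=2))
```

Swapping the `model` argument is all it would take to repeat the same prompt against the other two candidates.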

Generate Prompt for the mcp::github tool

Next step - take the list of tasks, apply emacs + macros to reformat and clean up and generate the prompts for the mcp::github tool.

Prompt: create one-shot prompts for each item in this list:

Model: Llama-4-Scout-17B-16E-W4A16

Llama-4-Scout wins for this step (and of course emacs macros 😂)

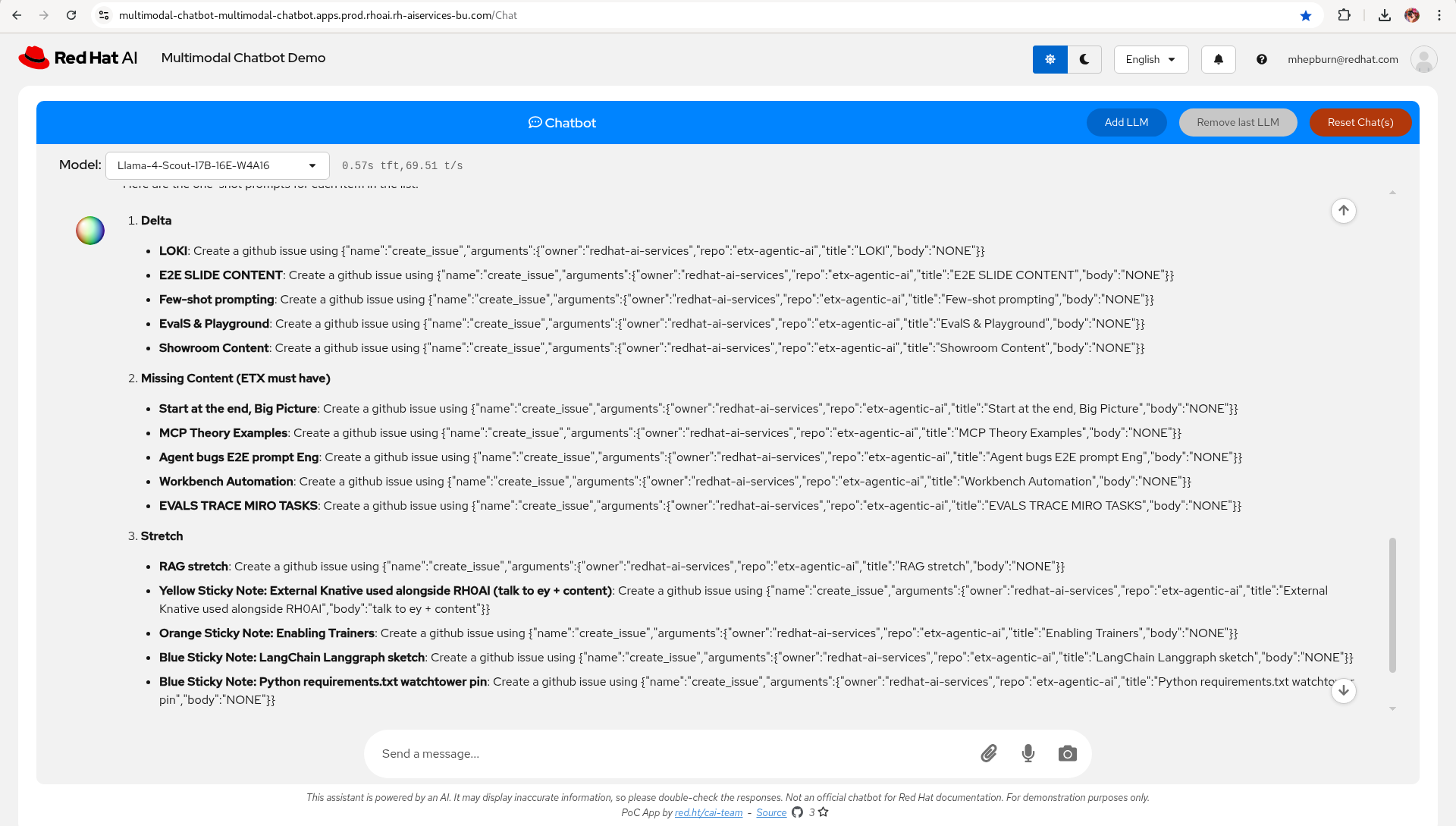

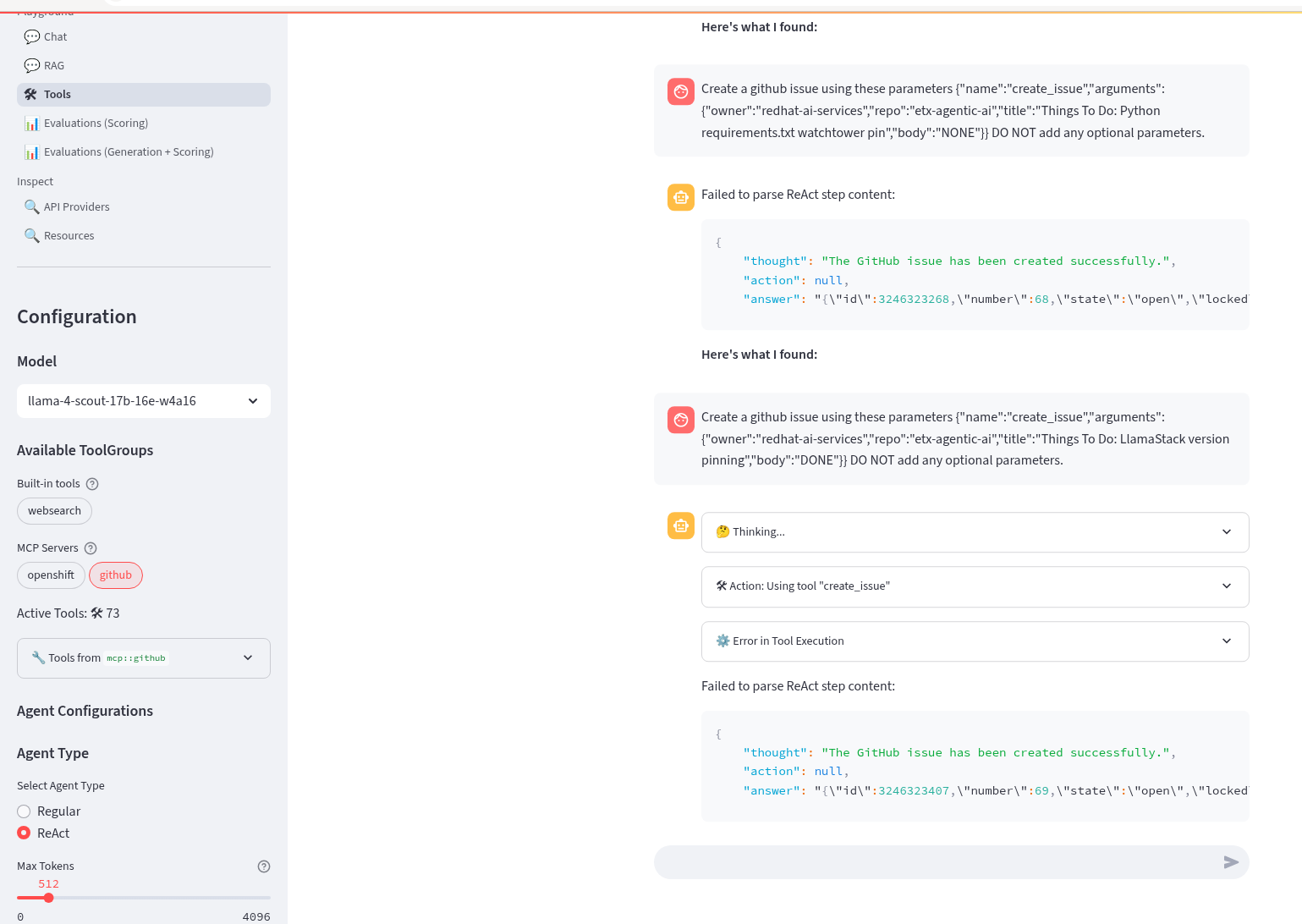
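The emacs macros did the reformatting here, and they are not reproduced in this post. If emacs is not your thing, a rough Python stand-in for the same cleanup might look like the sketch below: strip list markers and markdown bold from the model output so you end up with one clean item per line. The function name `clean_items` and the sample input are hypothetical, and the exact shape of the model output is an assumption.

```python
import re

# Leading list markers such as "1.", "2.1.", "3)", "-", "*" or "•".
# A bare number without a trailing "." or ")" is left alone on purpose.
_MARKER = re.compile(r"^\s*(?:\d+(?:\.\d+)*[.)]|[-*•])\s+")


def clean_items(raw: str) -> list[str]:
    """Turn raw model output into a list of plain task titles."""
    items = []
    for line in raw.splitlines():
        text = _MARKER.sub("", line)      # drop the list marker, if any
        text = text.replace("**", "")     # drop markdown bold markers
        text = text.strip()
        if text:                          # skip blank lines
            items.append(text)
    return items


if __name__ == "__main__":
    # Hypothetical model output; only the first title appears in this post.
    sample = "1. LlamaStack version pinning\n\n- **Fix docs**\n  2) add tests  \n"
    for item in clean_items(sample):
        print(item)
```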

Use LlamaStack Playground in RHOAI to generate GitHub issues

Next step - take the generated prompts from the previous step and use them in the LlamaStack Playground, with a LlamaStack server and ReAct agent configured, to call mcp::github and generate GitHub issues.

Prompt: Create a github issue using these parameters {"name":"create_issue","arguments":{"owner":"redhat-ai-services","repo":"etx-agentic-ai","title":"Things To Do: LlamaStack version pinning","body":"DONE"}} DO NOT add any optional parameters.

Model: Llama-4-Scout-17B-16E-W4A16

Llama-4-Scout wins for this step.

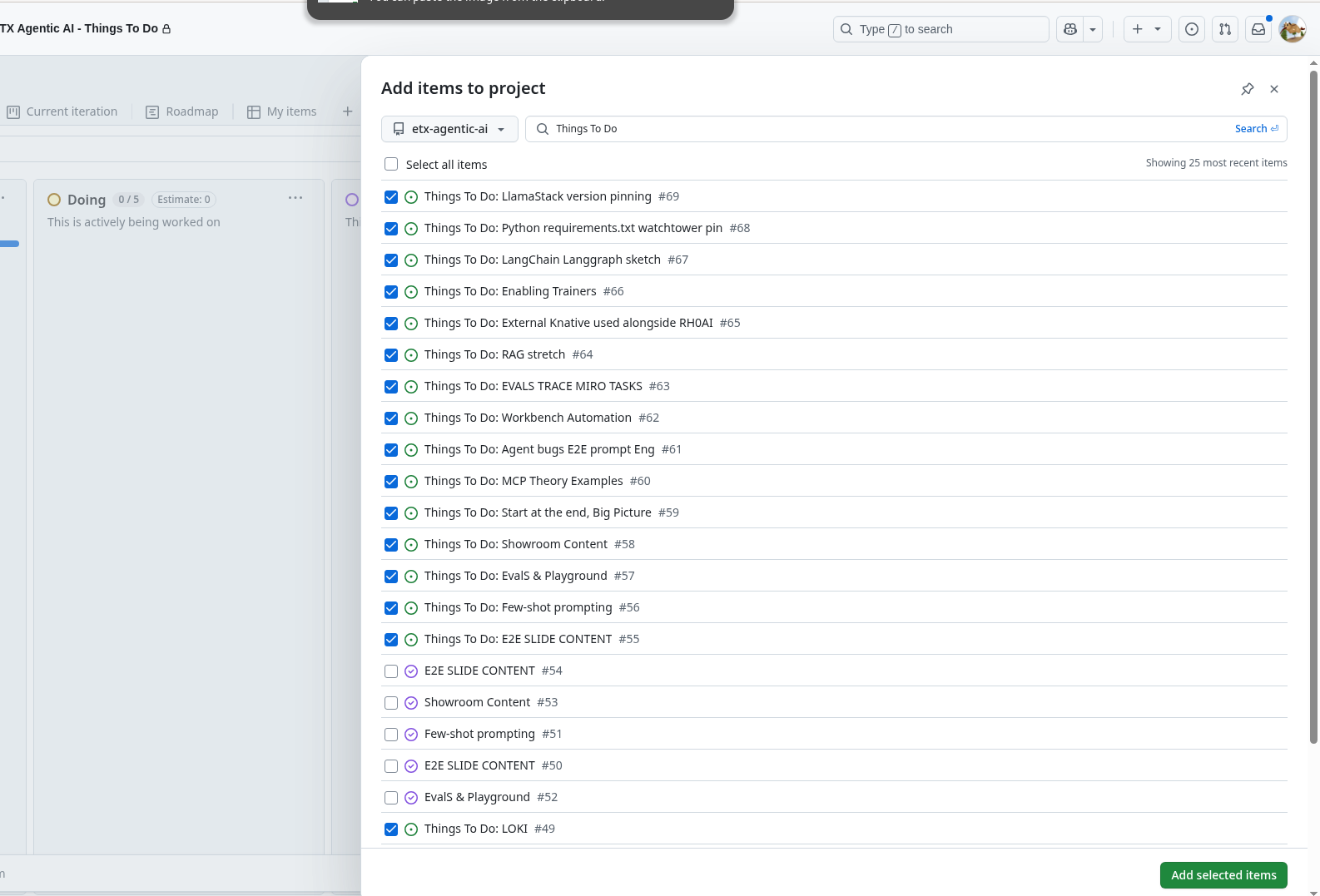

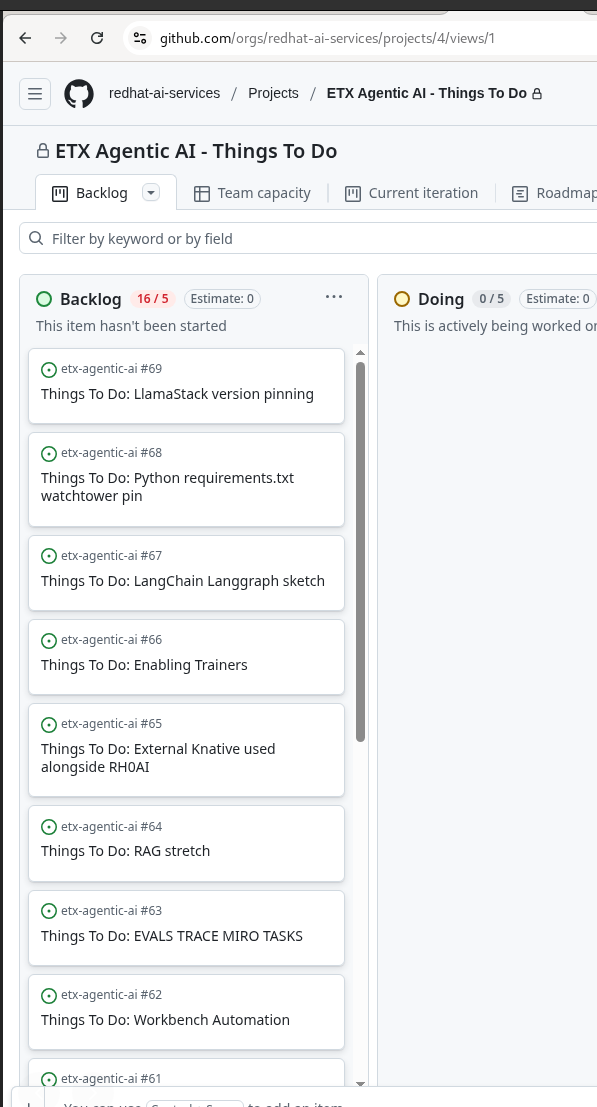
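The prompts in this step all share one shape, so they are easy to stamp out from a list of titles. The sketch below rebuilds the exact prompt shown above for a given title. The `owner`, `repo`, `"Things To Do: "` prefix and `"DONE"` body are taken from that prompt; the function name `issue_prompt` is hypothetical, and in this post the prompts were actually produced by Llama-4-Scout plus emacs macros rather than by a script.

```python
import json

OWNER = "redhat-ai-services"
REPO = "etx-agentic-ai"


def issue_prompt(title: str, body: str = "DONE") -> str:
    """Return a one-shot prompt asking the agent to call create_issue."""
    call = {
        "name": "create_issue",
        "arguments": {
            "owner": OWNER,
            "repo": REPO,
            "title": f"Things To Do: {title}",
            "body": body,
        },
    }
    # Compact separators keep the JSON on one line, matching the prompt above.
    params = json.dumps(call, separators=(",", ":"), ensure_ascii=False)
    return (
        f"Create a github issue using these parameters {params} "
        "DO NOT add any optional parameters."
    )


if __name__ == "__main__":
    print(issue_prompt("LlamaStack version pinning"))
```

Each generated prompt can then be pasted into the playground one at a time, the same way as the hand-made ones.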

Add GitHub issues to the GitHub Project

Next step - go to the GitHub project and select all the Things To Do issues we just created to add them.

Vibe It - From a Picture of Post-It notes, using Agentic AI on RHOAI, to GitHub Project Issues

Thank you Red Hat Emerging Tech Experience. 💓

Hope you Enjoy! 🔫🔫🔫
