@Extends

@Key(fields = "id")

public class Product {

private Review review;

// getters and setters not shown

}

Tag: quarkus

GraphQL Federation with Quarkus

16 June 2023

Tags : quarkus, graphql, apollo, api, teams

A canonical example using Quarkus GraphQL Federation and the Apollo Gateway server.

New Age of APIs

For some time now I have been following and using graphql as an API mechanism. If you have not heard of it, there are many great resources that talk about the benefits. Another great learning site is here. For me, some obvious benefits of GraphQL include:

- eliminates over-fetching of data from an API

- code a graphql API that can easily change without having to modify all the client code

- you don’t end up with hundreds of REST APIs for every single use case that comes up

In particular though - the composable nature of Federated graphql schemas is what catches my eye. Some early adopters like Netflix have blogged about their engineering efforts in this area.

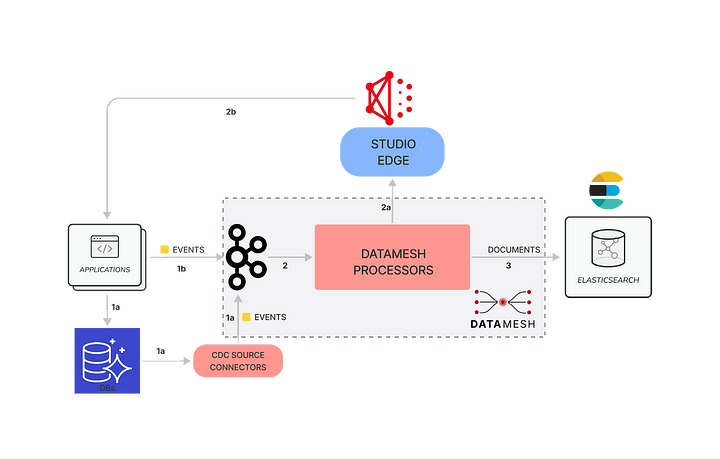

In particular, check out Netflix’s Studio Search and DataMesh blogs.

By using a Federated Graph with graphql, different departments within the company can independently control and contribute the APIs that compose StudioSearch.

For me, this also fits in with a Team Topology view of the world, where individual stream-aligned business teams can control their bits of the graph whilst contributing to the whole fairly autonomously.

Issues of Code

Graphql was born in a nodejs ecosystem. But of course we ❤ Java, and in particular, Quarkus has a smallrye graphql implementation.

There has been a long-running RFE for adding GraphQL Federation to Quarkus. I would say in general things are looking pretty good these days with the addition of federation into the underlying smallrye implementation.

The other day, I was following this bug around resolving entities and was thinking to myself "this should work OK now!!" - so I went ahead and played around with the code. What resulted was a nice simple working example of graphql federation.

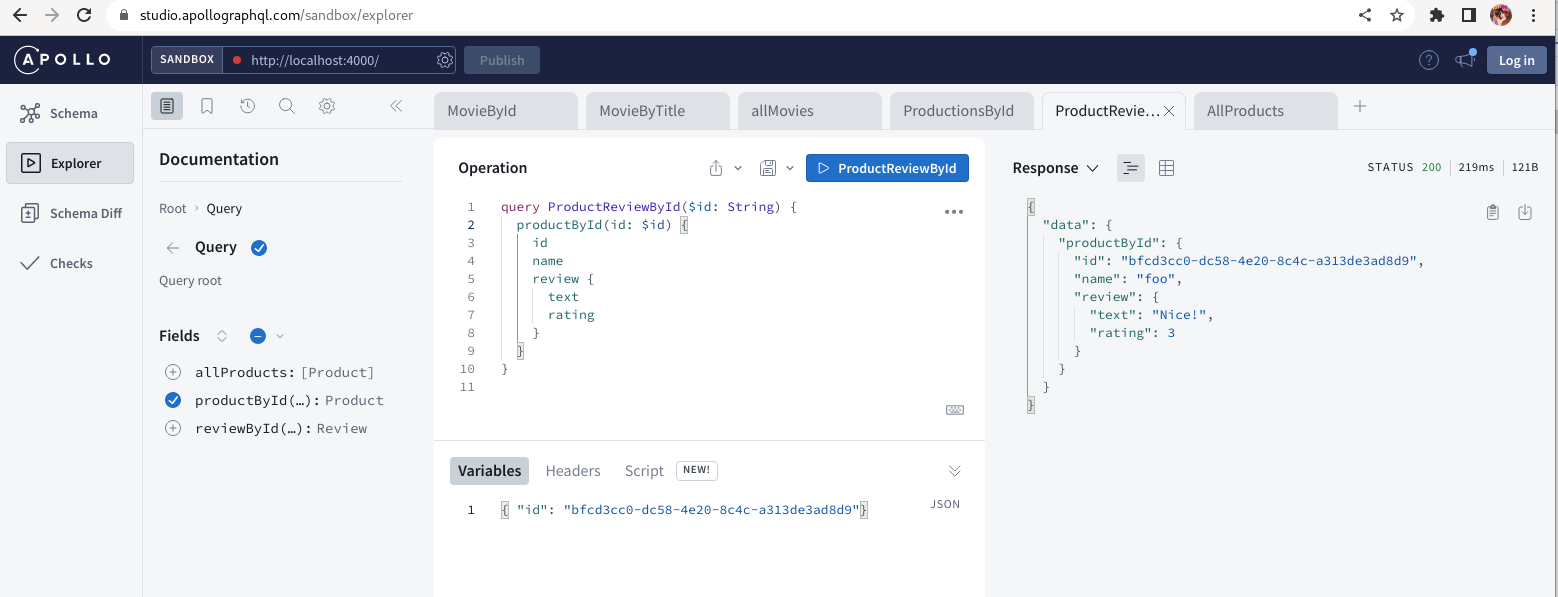

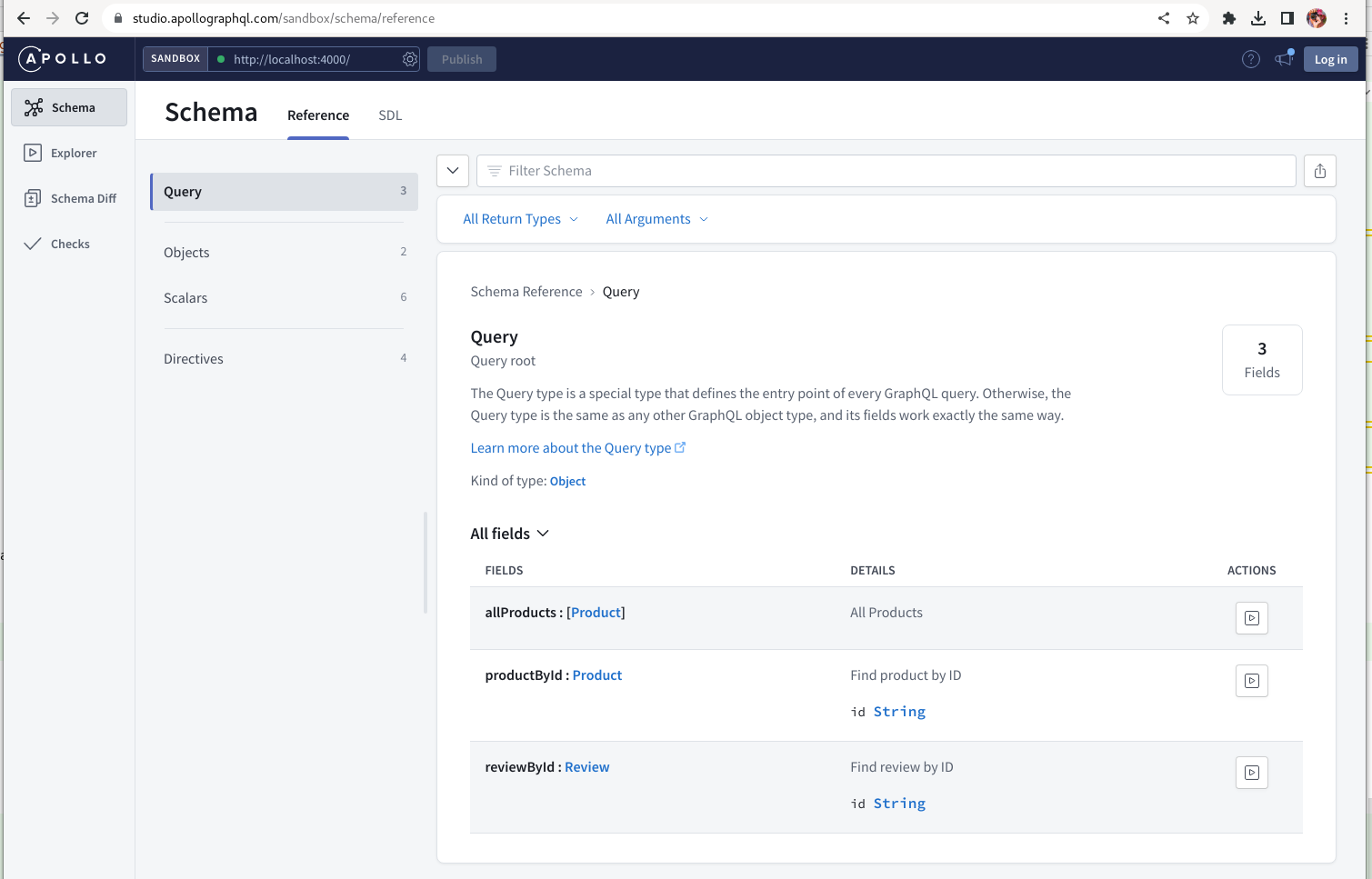

In the picture of Apollo’s Explorer - the Product{id, name} are sourced from the Product subgraph, and the Product{review} from the Review subgraph.

🤠 The Source Code is HERE so you can follow along. 🤠

The Example in Detail

Let me explain it a bit more in detail. There are three pieces to the simple architecture - a gateway component (apollo server), and two Quarkus graphql API’s (Product, Review).

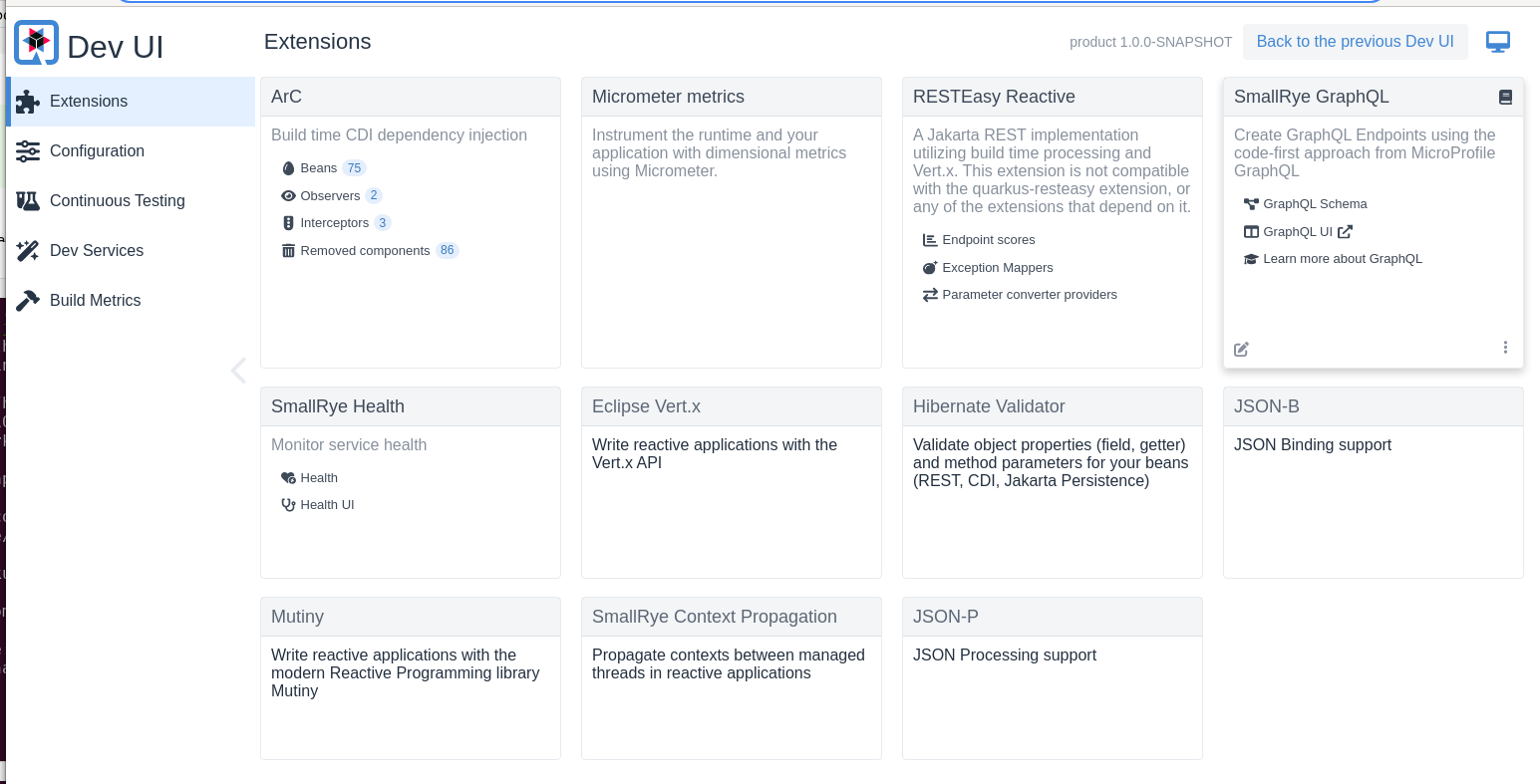

You can run the two Quarkus graphql API’s using maven, and for any one of them, browse to the Quarkus Developer UI by hitting the 'd' key in the terminal. For example:

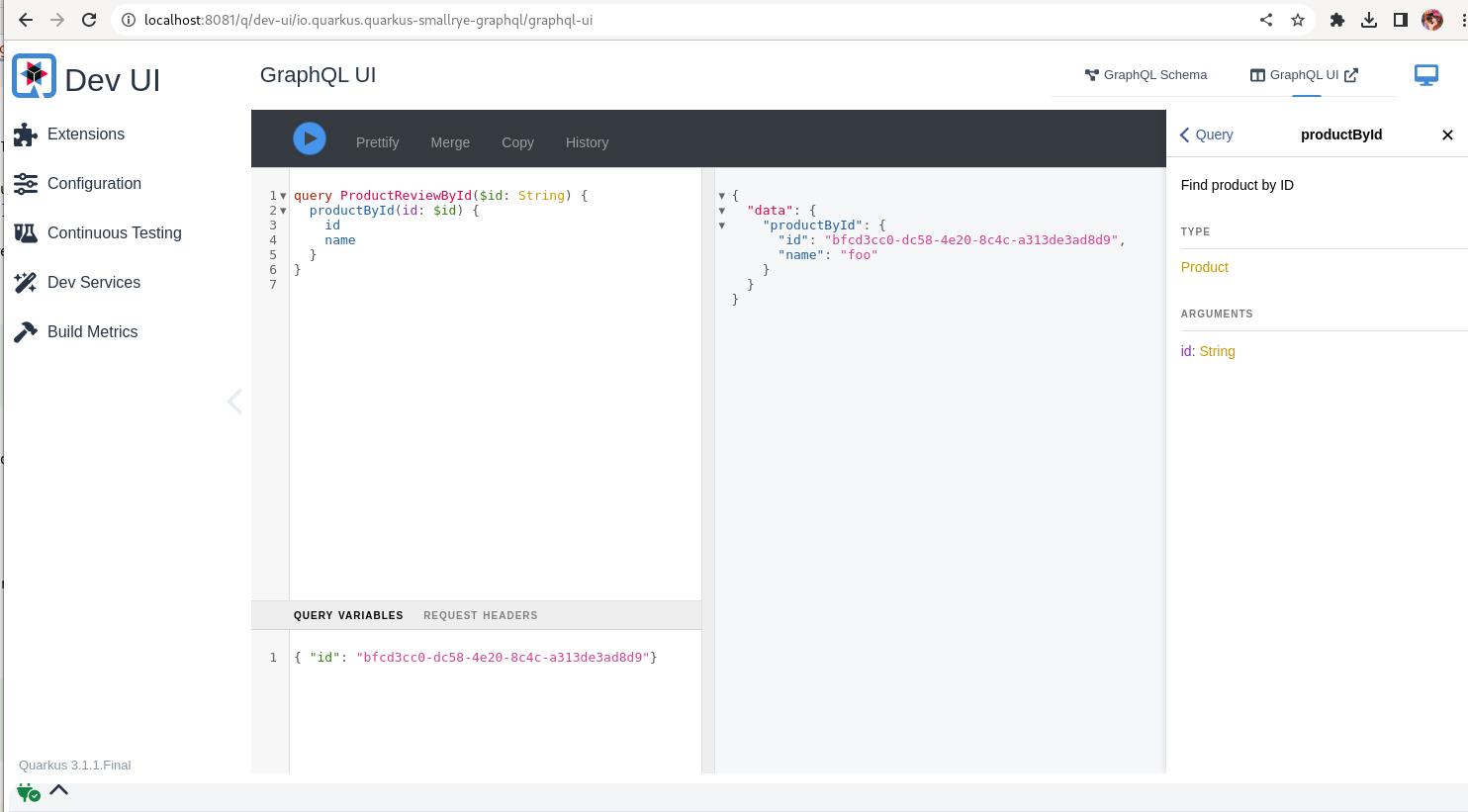

Use the SmallRye GraphQL panel to browse the individual schema and to run individual queries using GraphiQL against the single API. For example, for the Product API we can run ProductByID and see the result for the fields we desire.

Now, in the Review service, we use a Federated Entity that you can read about here to extend the Product with a Review.

The key bit of Java code is the annotations that make this happen in the Review module’s Product domain model class i.e. @Extends and @Key:

In this way, we are extending the Product from the Product API with a Review.

We can query the individual graphql schemas in the DevUI or from the command line using curl.

Product API

$ curl http://localhost:8081/graphql/schema.graphql

union _Entity = Product

type Product @key(fields : "id") {

id: ID

name: String

}

"Query root"

type Query {

_entities(representations: [_Any!]!): [_Entity]!

_service: _Service!

"All Products"

allProducts: [Product]

"Find product by ID"

productById(id: String): Product @provides(fields : "id")

}

type _Service {

sdl: String!

}

Review API

$ curl http://localhost:8082/graphql/schema.graphql

union _Entity = Product | Review

type Product @extends @key(fields : "id") {

id: ID

review: Review

}

"Query root"

type Query {

_entities(representations: [_Any!]!): [_Entity]!

_service: _Service!

"Find product by ID"

productById(id: String): Product

"Find review by ID"

reviewById(id: String): Review

}

type Review @key(fields : "id") {

id: ID

product: Product

rating: Int

text: String

}

type _Service {

sdl: String!

}

We can see that Product {id review} is provided by the Review API, whilst Product {id name} is provided by the Product API. You can also query for Reviews and Products independently.
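To make that split concrete, here is a minimal Java sketch of the kind of merge a federation gateway performs: take the fields one subgraph owns, then use the @key field (id) to pick up the fields another subgraph contributes for the same entity. It is an illustration only and not how Apollo implements it; the class name, the in-memory maps standing in for the two subgraphs, and the sample data are all hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative only: in-memory stand-ins for the two subgraphs, keyed by the
// Product @key field "id". All names and sample data are hypothetical.
final class EntityMergeSketch {

    // Fields owned by the Product subgraph.
    static final Map<String, Map<String, Object>> PRODUCT_SUBGRAPH = Map.of(
            "1", Map.<String, Object>of("id", "1", "name", "Widget"));

    // Fields contributed by the Review subgraph for the same key.
    static final Map<String, Map<String, Object>> REVIEW_SUBGRAPH = Map.of(
            "1", Map.<String, Object>of(
                    "id", "1",
                    "review", Map.<String, Object>of("rating", 5, "text", "Great")));

    // Resolve one Product by key, combining the fields from both subgraphs.
    static Map<String, Object> resolveProduct(String id) {
        Map<String, Object> merged = new LinkedHashMap<>();
        merged.putAll(PRODUCT_SUBGRAPH.getOrDefault(id, Map.of()));
        merged.putAll(REVIEW_SUBGRAPH.getOrDefault(id, Map.of()));
        return merged;
    }

    public static void main(String[] args) {
        // Prints a single map holding id, name and review for product "1".
        System.out.println(resolveProduct("1"));
    }
}
```

The real gateway does this over the network, via the _entities field that appears in both schemas above; the sketch only shows why a shared key is enough to join the two halves of a Product.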

I have omitted all the scalars and directives in the schema output above that are generated by setting these application properties:

quarkus.smallrye-graphql.schema-include-scalars=true

quarkus.smallrye-graphql.schema-include-directives=true

These are required by the gateway when it introspects the graphql schema to compose the one Supergraph.

Now to the gateway piece, which is run using apollo nodejs:

gateway$ npm run start

> start

> nodemon gateway.js

[nodemon] 2.0.22

[nodemon] to restart at any time, enter `rs`

[nodemon] watching path(s): *.*

[nodemon] watching extensions: js,mjs,json

[nodemon] starting `node gateway.js`

🚀 Server ready at: http://localhost:4000/

If you browse to the Apollo endpoint, it will take you to the Sandbox, which is very similar to the Quarkus GraphiQL interface (the Sandbox connects a websocket to your localhost:4000 port even though it appears on a cloud-hosted URL). It shows a nice schema view for the supergraph:

You can also query this using curl which is harder and a bit uglier:

$ curl -s -X POST http://localhost:4000/graphql -H "Content-Type: application/json" --data-binary '{"query":"{\n\t__schema{\n queryType {\n fields{\n name\n }\n }\n }\n}"}' | jq .

{

"data": {

"__schema": {

"queryType": {

"fields": [

{

"name": "allProducts"

},

{

"name": "productById"

},

{

"name": "reviewById"

}

]

}

}

}

}

The important bit is that this is the federated Supergraph, so when we query for Product we can ask for the Product {id name review} fields and the gateway resolves the entities for us, yay !!
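For comparison with the curl call above, the same kind of request can be sent from Java using the JDK's built-in HTTP client. This is a rough sketch rather than code from the example repository: the class name is made up, and the product id "1" is an assumption about the sample data. The field names come from the schemas shown earlier.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Illustrative client for the gateway; the product id "1" is an assumption,
// not something taken from the example repository.
final class GatewayQuerySketch {

    // Wrap a GraphQL query in the JSON envelope sent over HTTP,
    // escaping backslashes, quotes and common whitespace control characters.
    static String toJsonBody(String query) {
        String escaped = query
                .replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r")
                .replace("\t", "\\t");
        return "{\"query\":\"" + escaped + "\"}";
    }

    public static void main(String[] args) throws InterruptedException {
        // One query spanning both subgraphs: id and name from Product, review from Review.
        String query = "{ productById(id: \"1\") { id name review { rating text } } }";
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://localhost:4000/graphql"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(toJsonBody(query)))
                .build();
        try {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        } catch (IOException e) {
            // Typically means the gateway is not running on localhost:4000.
            System.err.println("Gateway not reachable: " + e);
        }
    }
}
```

The hand-rolled escaping keeps the sketch dependency-free; in a real client a JSON library would be the safer choice.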

The one little hack required for the Apollo code to work with Quarkus is documented in the README. Apollo does not support the newish application/graphql+json Mime Type yet despite PRs ;) including one from me!

Hope you enjoy! There is a wealth of fun stuff to code with using graphql (go look up Mutations next!) 👾👾👾

Service Discovery and Load Balancing with Stork

25 May 2023

Tags : quarkus, service discovery, stork, load balancing, java

Stork is a service discovery and client-side load-balancing framework. It’s one of those critical services you find out you need when doing distributed services programming. Have a read of the docs; it integrates into common open source tooling such as Hashi’s Consul as well as a host of others. Even though OpenShift/Kubernetes has built-in support for service discovery and load-balancing, you may need more flexibility to carefully select the service instance you want.

DNS SRV for Service Discovery

I wanted to try out good ol' fashioned SRV Records as a means of testing out the client-side service discovery in Stork. Many people forget that DNS itself supports service discovery for high service availability. It is still very commonly used, especially in mobile/telco.

My test case would be to create a DNS SRV record that queries OpenShift Cluster Canary Application endpoints. I’m using Route53 for DNS so you can read the SRV Records format here. The first three fields of each record value are priority, weight, and port, followed by the target hostname.

1 10 443 canary-openshift-ingress-canary.apps.sno.eformat.me

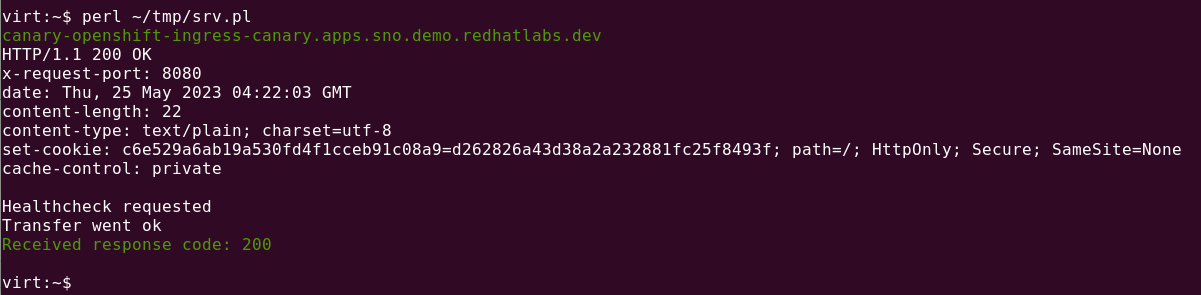

1 10 443 canary-openshift-ingress-canary.apps.baz.eformat.me

If you curl one of these, you get a "Healthcheck requested" response back if the service is running.

curl https://canary-openshift-ingress-canary.apps.sno.eformat.me

Healthcheck requested

So, in my example, you can get a full list of SRV record values by querying:

dig SRV canary.demo.redhatlabs.dev

Coding a Quick Client

For a quick and dirty client to make use of the SRV record I reach for my favourite tool, yes Perl 🐫🐫🐫 !

Let’s query the SRV record and see if my OpenShift clusters are healthy.

# sudo dnf install -y perl-Net-DNS perl-WWW-Curl

use Net::DNS;

use WWW::Curl::Easy;

use Term::ANSIColor qw(:constants);

sub lookup {

my ($dc) = @_;

my $res = Net::DNS::Resolver-> new;

my $query = $res->send($dc, "SRV");

if ($query) {

foreach my $rr ($query->answer) {

next unless $rr->type eq 'SRV';

# return first found

return $rr->target;

}

} else {

print("SRV lookup failed: " . $res->errorstring . "\n");

}

return;

}

my $host = lookup("canary.demo.redhatlabs.dev");

print GREEN, $host . "\n", RESET;

my $curl = WWW::Curl::Easy->new;

$curl->setopt(CURLOPT_HEADER,1);

$curl->setopt(CURLOPT_URL, 'https://' . $host);

$curl->setopt(CURLOPT_SSL_VERIFYHOST, 0);

my $retcode = $curl->perform;

if ($retcode == 0) {

print("Transfer went ok\n");

my $response_code = $curl->getinfo(CURLINFO_HTTP_CODE);

print(GREEN, "Received response code: $response_code\n", RESET, "\n");

} else {

print(RED, "An error happened: $retcode ". RESET . $curl->strerror($retcode)." ".$curl->errbuf."\n");

}

Of course, feel free to run this in a loop :) Because each record is equally weighted in the SRV, you will get round-robin behaviour.
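The Perl script simply takes the first SRV answer it is given, so any rotation depends on the order in which answers come back. A client that wants to honour the weights itself could do something along the following lines. This is a minimal Java sketch, not what Stork does internally: it parses the "priority weight port target" layout described above and makes a weighted pick among records that are assumed to share one priority. The class and method names are hypothetical.

```java
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

// Sketch of SRV handling; only the record value layout comes from the post.
final class SrvSketch {

    // One SRV record value: "priority weight port target".
    static final class SrvRecord {
        final int priority;
        final int weight;
        final int port;
        final String target;

        SrvRecord(int priority, int weight, int port, String target) {
            this.priority = priority;
            this.weight = weight;
            this.port = port;
            this.target = target;
        }

        static SrvRecord parse(String value) {
            String[] parts = value.trim().split("\\s+");
            if (parts.length != 4) {
                throw new IllegalArgumentException("Expected 4 fields: " + value);
            }
            return new SrvRecord(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]),
                    Integer.parseInt(parts[2]), parts[3]);
        }
    }

    // Weighted pick among records assumed to share one priority.
    // 'roll' must be in [0, totalWeight); callers normally draw it at random.
    static SrvRecord pick(List<SrvRecord> records, int roll) {
        int running = 0;
        for (SrvRecord record : records) {
            running += record.weight;
            if (roll < running) {
                return record;
            }
        }
        throw new IllegalArgumentException("roll out of range: " + roll);
    }

    public static void main(String[] args) {
        List<SrvRecord> records = List.of(
                SrvRecord.parse("1 10 443 canary-openshift-ingress-canary.apps.sno.eformat.me"),
                SrvRecord.parse("1 10 443 canary-openshift-ingress-canary.apps.baz.eformat.me"));
        int total = records.stream().mapToInt(r -> r.weight).sum();
        int roll = ThreadLocalRandom.current().nextInt(total);
        System.out.println(pick(records, roll).target);
    }
}
```

With two records of weight 10 each, rolls 0 to 9 select the first target and rolls 10 to 19 the second, so over many calls the traffic splits evenly.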

So, looking good so far.

Stork and Java

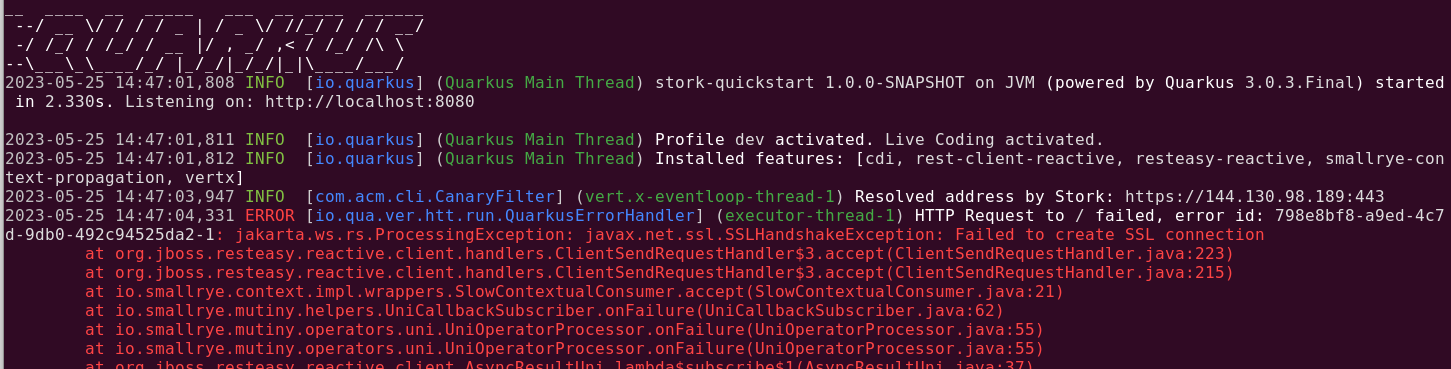

Of course, the whole point was to try out Stork. Following the Quarkus Stork getting started guide, I used a simple REST client service and configured the canary Stork service as follows:

Unfortunately, this didn’t work as I expected! The SRV record values were resolved to IP addresses instead of returning the DNS name for me to query. The issue with just an IP address is that Routing in OpenShift requires the HEAD/Location to be set properly so the correct endpoint Route can be routed and queried using HAProxy.

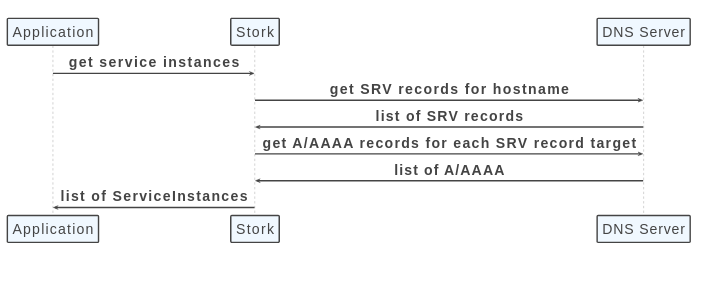

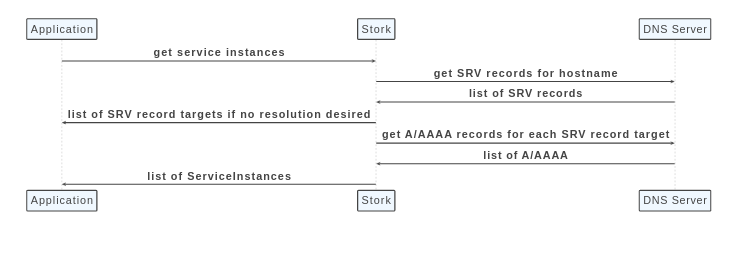

The Stork documentation spells out this DNS resolution process:

Looking at the source code led me to submit this PR, which adds an option so that you can skip the DNS resolution step.

So, adding this property using the new version of the Stork library:

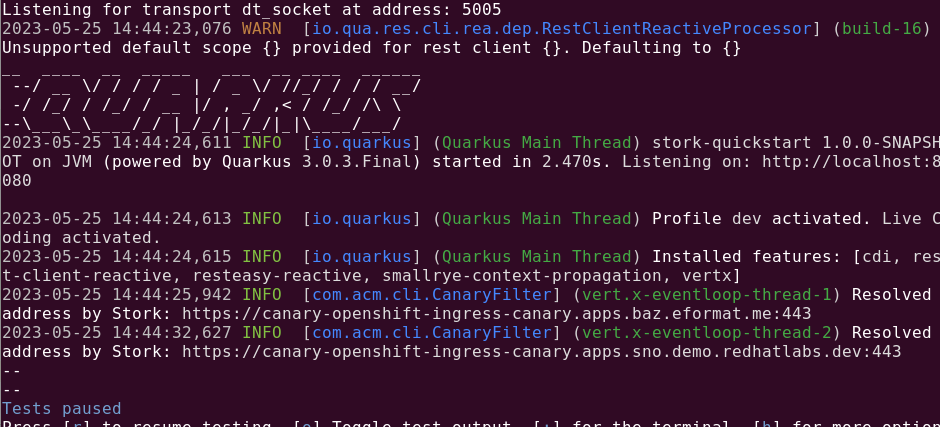

quarkus.stork.canary.service-discovery.resolve-srv=false

leads to the DNS names being returned and not the IP addresses:

Trying out the code, the Service call now works as expected:

YAY ! 🦍 Check out the source code here and watch out for the next version of Stork !

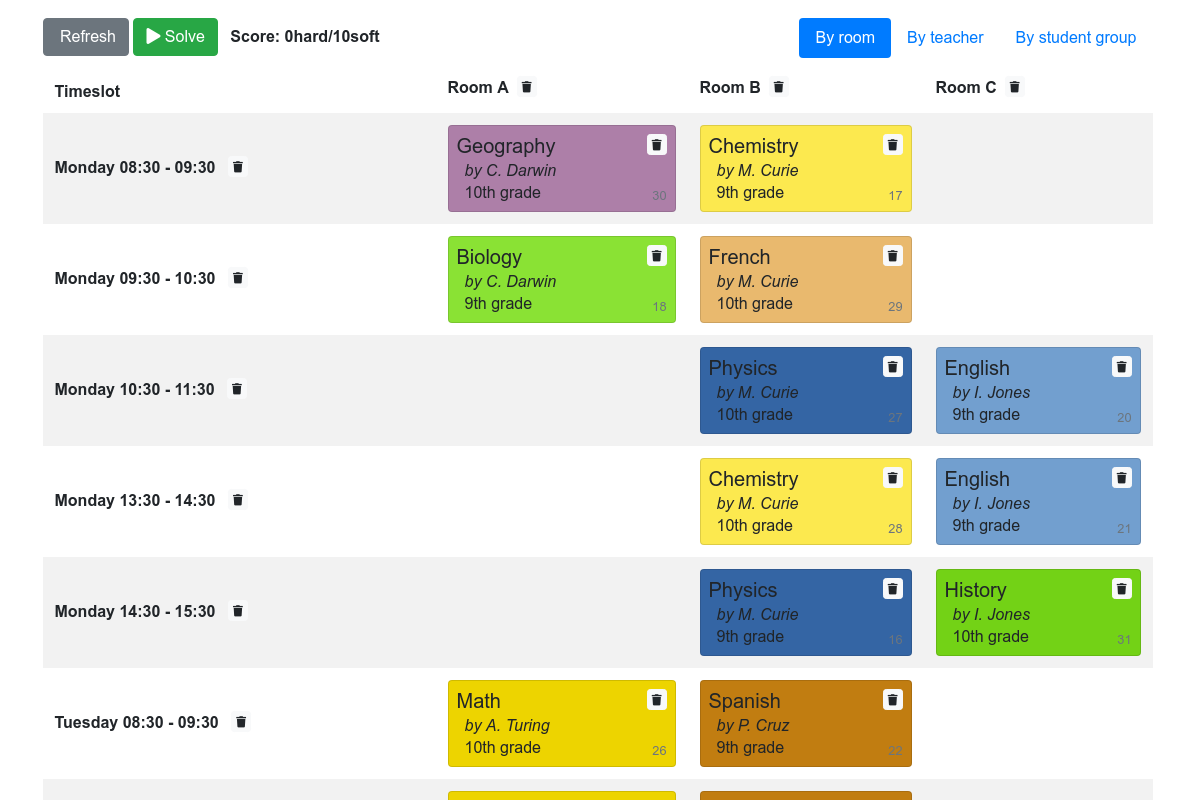

AI Constraints Programming with Quarkus and OptaPlanner

04 November 2022

Tags : quarkus, constraints, optaplanner, java

AI on Quarkus: I love it when an OptaPlan comes together

I have been meaning to look at OptaPlanner for ages. All I can say is "Sorry Geoffrey De Smet, you are a goddamn genius and I should have played with OptaPlanner way sooner".

So, I watched this video to see how to get started.

So much fun to code ! 😁

There were a couple of long-learnt lessons I remembered whilst playing with the code.

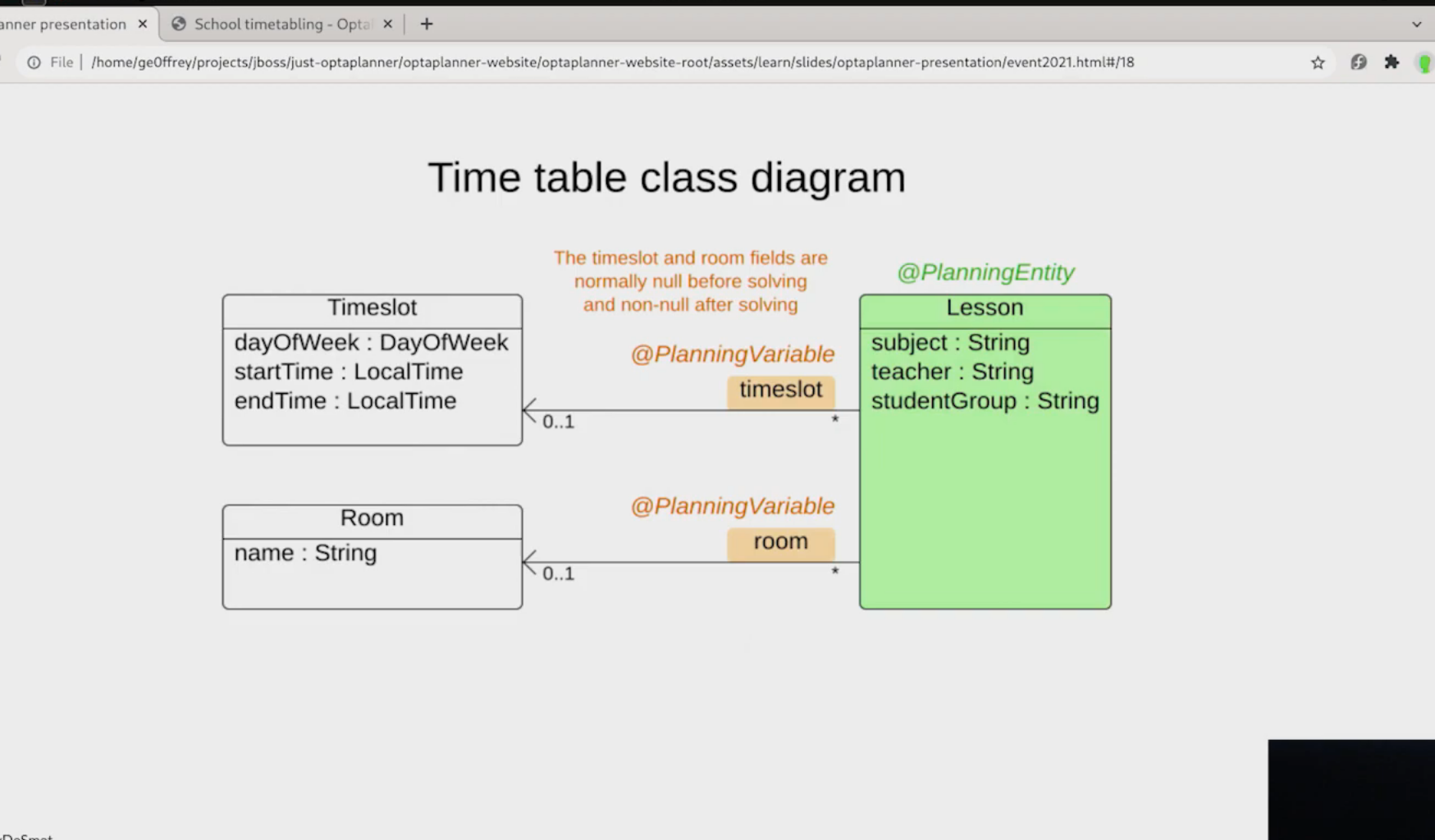

(1) Domain Driven Design

To get at the heart of constraints programming you need a good object class hierarchy, one that is driven by your business domain. Thanks Eric Evans for the gift that keeps giving - DDD (and UML) is perfect to help you out here.

You need to have a clean and well thought out class hierarchy so that wiring in OptaPlanner will work for you. I can see several iterations and workshop sessions ensuing to get to a workable and correct understanding of the problem domain.

(2) Constraints Programming

I went looking for some code I helped write some 15 years ago ! A constraint-based programming model we had written in C++.

We had a whole bunch of Production classes used for calculating different trade types and their values. You added these productions into a solver class hierarchy and if you had the right degrees of freedom your trade calculation would be successful. The beauty of it was the solver would spit out any parameter you had not specified, as long as it was possible to calculate it based on the production rules.
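To give a flavour of that idea, here is a toy Java sketch of a production-rule solver. It is not the C++ code described above and it does not use OptaPlanner; the rule shape (product = left * right) and the variable names price, quantity, notional, feeRate and fee are all invented for illustration. Each production can derive whichever one of its three variables is missing, and the solver keeps applying productions until nothing new can be calculated.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical miniature of a production-rule solver; the rule shape and the
// variable names are invented for illustration.
final class ProductionSolverSketch {

    // A production stating: product = left * right.
    static final class Production {
        final String left;
        final String right;
        final String product;

        Production(String left, String right, String product) {
            this.left = left;
            this.right = right;
            this.product = product;
        }

        // Fill in the one missing variable if the other two are known.
        // Returns true when a new value was derived.
        boolean apply(Map<String, Double> values) {
            Double l = values.get(left);
            Double r = values.get(right);
            Double p = values.get(product);
            if (p == null && l != null && r != null) {
                values.put(product, l * r);
            } else if (l == null && p != null && r != null && r != 0) {
                values.put(left, p / r);
            } else if (r == null && p != null && l != null && l != 0) {
                values.put(right, p / l);
            } else {
                return false;
            }
            return true;
        }
    }

    // Keep applying productions until no rule can derive anything new.
    static Map<String, Double> solve(List<Production> rules, Map<String, Double> known) {
        Map<String, Double> values = new HashMap<>(known);
        boolean progress = true;
        while (progress) {
            progress = false;
            for (Production rule : rules) {
                progress |= rule.apply(values);
            }
        }
        return values;
    }

    public static void main(String[] args) {
        List<Production> rules = List.of(
                new Production("price", "quantity", "notional"),
                new Production("notional", "feeRate", "fee"));
        // price and fee are not supplied; the solver derives both.
        System.out.println(solve(rules,
                Map.of("notional", 1000.0, "quantity", 10.0, "feeRate", 0.01)));
    }
}
```

If too few values are supplied (not enough degrees of freedom pinned down), the loop simply stops and the unknowns stay out of the result, which mirrors the "as long as it was possible to calculate it" caveat.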

OptaPlanner viscerally reminded me of that code and experience, and started me thinking about how to use it for a similar use case.

I am now a fan 🥰

One last lesson from the OptaPlanner crew was their use of a new static doc-generation system, JBake, which I am using to write this blog. Their docs are a thing of beauty, I have to say. Thanks for all the fish 🐟 🐠 Geoff.

OptaPlanner Quickstarts Code - https://github.com/kiegroup/optaplanner-quickstarts