Pulsar Flink

02 November 2022

Tags : streaming, pulsar, flink, java

I have been messing around with yet another streaming demo (YASD). You really just cannot have too many. 🤩

I am a fan of server-sent events. Why? Because they are HTML5 native. No messing around with web sockets. I have a small Quarkus app that generates stock quotes, which you can easily run locally or on OpenShift:

oc new-app quay.io/eformat/quote-generator:latest

oc create route edge quote-generator --service=quote-generator --port=8080

and then retrieve the events in the browser or by curl:

curl -H "Content-Type: application/json" --max-time 9999999 -N http://localhost:8080/quotes/stream

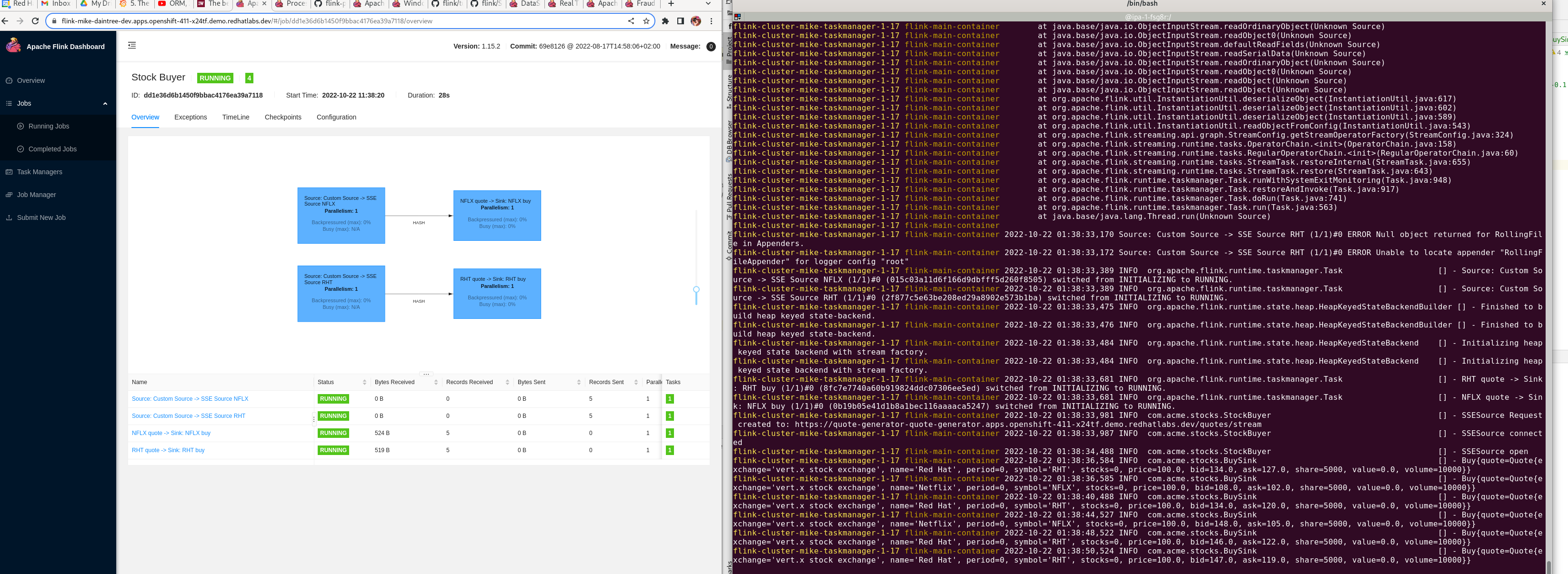

So, first challenge: how might we consume these SSEs using Flink? I found a handy AWS Kinesis SSE demo, which I snarfed the SSE/OkHttp code from. I wired this into Flink's RichSourceFunction:
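The real source is in the repo linked at the end. To give a feel for what any SSE consumer has to do, here is a minimal plain-JDK sketch of the parsing step. The `SseParser` class and its `readEvents` method are hypothetical names of mine, not something from the repo or from OkHttp. The sketch assumes the standard SSE wire format: `data:` lines accumulate into one event, a blank line ends the event, and everything else (comments, `id:`, `event:`) is ignored here.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.function.Consumer;

// Hypothetical helper for illustration only; not part of flink-stocks.
final class SseParser {
    private SseParser() {}

    // Reads an SSE stream and hands each completed event payload to onEvent.
    // Consecutive "data:" lines are joined with '\n'; a blank line dispatches
    // the event. An unterminated event at end of stream is dropped.
    static void readEvents(Reader in, Consumer<String> onEvent) {
        StringBuilder current = new StringBuilder();
        boolean hasData = false;
        try {
            BufferedReader reader = new BufferedReader(in);
            String line;
            while ((line = reader.readLine()) != null) {
                if (line.isEmpty()) {
                    // Blank line: the event is complete.
                    if (hasData) {
                        onEvent.accept(current.toString());
                    }
                    current.setLength(0);
                    hasData = false;
                } else if (line.startsWith("data:")) {
                    String value = line.substring("data:".length());
                    // A single leading space after the colon is not part of the value.
                    if (value.startsWith(" ")) {
                        value = value.substring(1);
                    }
                    if (hasData) {
                        current.append('\n');
                    }
                    current.append(value);
                    hasData = true;
                }
                // Comments (":...") and other fields (id:, event:, retry:) are ignored.
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Inside a Flink source, the callback would presumably be something like the source context's `collect`, so each quote is emitted as soon as its blank line arrives rather than being buffered.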

So now I could consume this SSE source as a DataStream.

In the example, I wire in the stock quotes for NFLX and RHT. Next step: process these streams. Since I am new to Flink, I started with a simple print function, then read this stock price example from 2015! Cool. So I implemented a simple BuyFunction class that makes stock buy recommendations:
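The actual BuyFunction lives in the repo. Purely as an illustration of the kind of rule such a class could apply, here is a plain-Java sketch. The rule itself (recommend a buy when the price drops by at least a given fraction compared with the previous quote for the same symbol), the `BuyRule` name, and the recommendation string are all assumptions of mine and may look nothing like the real thing.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical rule for illustration only; the real BuyFunction may differ.
final class BuyRule {
    private final double dropThreshold;                  // e.g. 0.05 means a 5% drop
    private final Map<String, Double> lastPrice = new HashMap<>();

    BuyRule(double dropThreshold) {
        this.dropThreshold = dropThreshold;
    }

    // Returns a recommendation when the price fell by at least dropThreshold
    // relative to the previous quote seen for the same symbol.
    Optional<String> onQuote(String symbol, double price) {
        Double previous = lastPrice.put(symbol, price);  // remember the latest price
        if (previous != null && previous > 0
                && price <= previous * (1.0 - dropThreshold)) {
            return Optional.of("BUY " + symbol + " @ " + price);
        }
        return Optional.empty();
    }
}
```

In a Flink job the per-symbol map would more naturally be keyed state on a stream keyed by symbol, but the decision logic would stay about this small.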

Lastly, the recommendations need to be sent to a sink. Again, I started by using a simple print sink:

Friends of mine have been telling me how much more awesome Pulsar is compared to Kafka, so I also tried sending to a local Pulsar container that you can run using:

podman run -it -p 6650:6650 -p 8081:8080 --rm --name pulsar docker.io/apachepulsar/pulsar:2.10.2 bin/pulsar standalone

And forwarded to Pulsar with a simple utility class that uses the Pulsar Java client:

Then consume the messages to make sure they are there!

podman exec -i pulsar bin/pulsar-client consume -s my-subscription -n 0 persistent://public/default/orders

And I need to write this post as well .. getting it to run in OpenShift …

Source code is here - https://github.com/eformat/flink-stocks